Why Your Company Should Wait Until Fall 2023 to Use AI

If today's AI were a priest, it would be the worst priest in town

Back in the USSR they had public sparkling water machines installed right in the middle of the street. Anyone could come up, grab a glass (one glass for all), feed the machine a coin and enjoy the carbonated wonder. I don't know about you, but I've got questions. Mainly hygiene-related ones.

Today's AI is a lot like those machines. It's public. Anyone can use it. One glass for all: kids, company executives, criminals, government officials... our lips are touching the same glass over and over again.

What are the implications? Well, security above all. Remember how JPMorgan Chase recently restricted its employees' use of ChatGPT? Employees who feed ChatGPT confidential data do so for a variety of reasons. Some are simply curious about what the AI can do with their data. Others hope the AI will help them with their work. And still others are simply trying to be helpful.

Whatever the reason, the result is a serious security risk. By feeding ChatGPT confidential data, employees put the company's intellectual property and financial data at risk. They also put the company's customers at risk, as the data could be used to identify and target them for fraud or other malicious activity.

It's like the entire town confesses to one priest, but the priest couldn't care less about the sacrament of confession. That's ChatGPT and Bard for you.

These companies have banned or restricted their employees' use of AI:

- JPMorgan Chase

- Bank of America

- Citigroup

- Samsung

- Verizon

- Accenture

- Ikea

What’s at stake

1. Security

The biggest reason these companies, and yours, should not use AI in its current state is that public AI is not safe. Public AI refers to the AI models that are readily available for use on the internet. These models have been trained on large datasets and can be used for various tasks, such as image recognition, language translation, and speech recognition.

The problem with public AI is that everything you feed it ends up on servers you do not control. If those servers are breached, your company’s confidential data could be stolen. Attackers who gain access to the data being fed into these models can use it for malicious purposes. For instance, the data could be used to train a rival AI model and give someone a competitive advantage over your company.

Your competitors may gain access to your private and confidential data

For example, let’s say you’re Amway and you use public AI to analyze customer data. If a competitor such as Avon got hold of that data, it would gain insights into your customers’ preferences, behaviors, and purchasing patterns. This would put Amway at a significant disadvantage in the market.

2. Customization

Another issue with public AI models is that they are not tailored to your company. They are trained on large, general-purpose datasets, and while they may work well for some general tasks, they may not be the best fit for your company’s specific needs.

For example, let’s take a hypothetical health & wellness direct selling company that uses a public AI model to analyze IBO (Independent Business Owner) and customer data. This AI model will not be trained to handle the unique characteristics of the company’s IBOs or customers, such as their comp plan, location, order history, place in the tree, etc. This could lead to inaccurate analytical conclusions, putting the company’s corporate plans at risk.

What to do

Having your own trained AI model can address many of the issues associated with public AI models. Here are some compelling reasons why your company should consider having its own trained AI model:

1. Tailored to your company’s specific needs

When you have your own trained AI model, it can be tailored to your company’s specific needs. You can train the model on your own dataset, ensuring that it is optimized for your company’s unique characteristics. This can lead to better accuracy, faster processing times, and more efficient decision-making.

For example, let’s say you’re a financial services company that is using AI to detect fraud. By training your own AI model on your own dataset, you can ensure that the model is optimized for your company’s specific fraud detection needs. This can lead to more accurate fraud detection, reducing the risk of financial losses.

2. Private and confidential data is protected

When you have your own trained AI model, you can ensure that your private and confidential data is protected. You have complete control over the data that is being used to train the model, and you can implement strict security protocols to ensure that the data is not stolen or hacked.

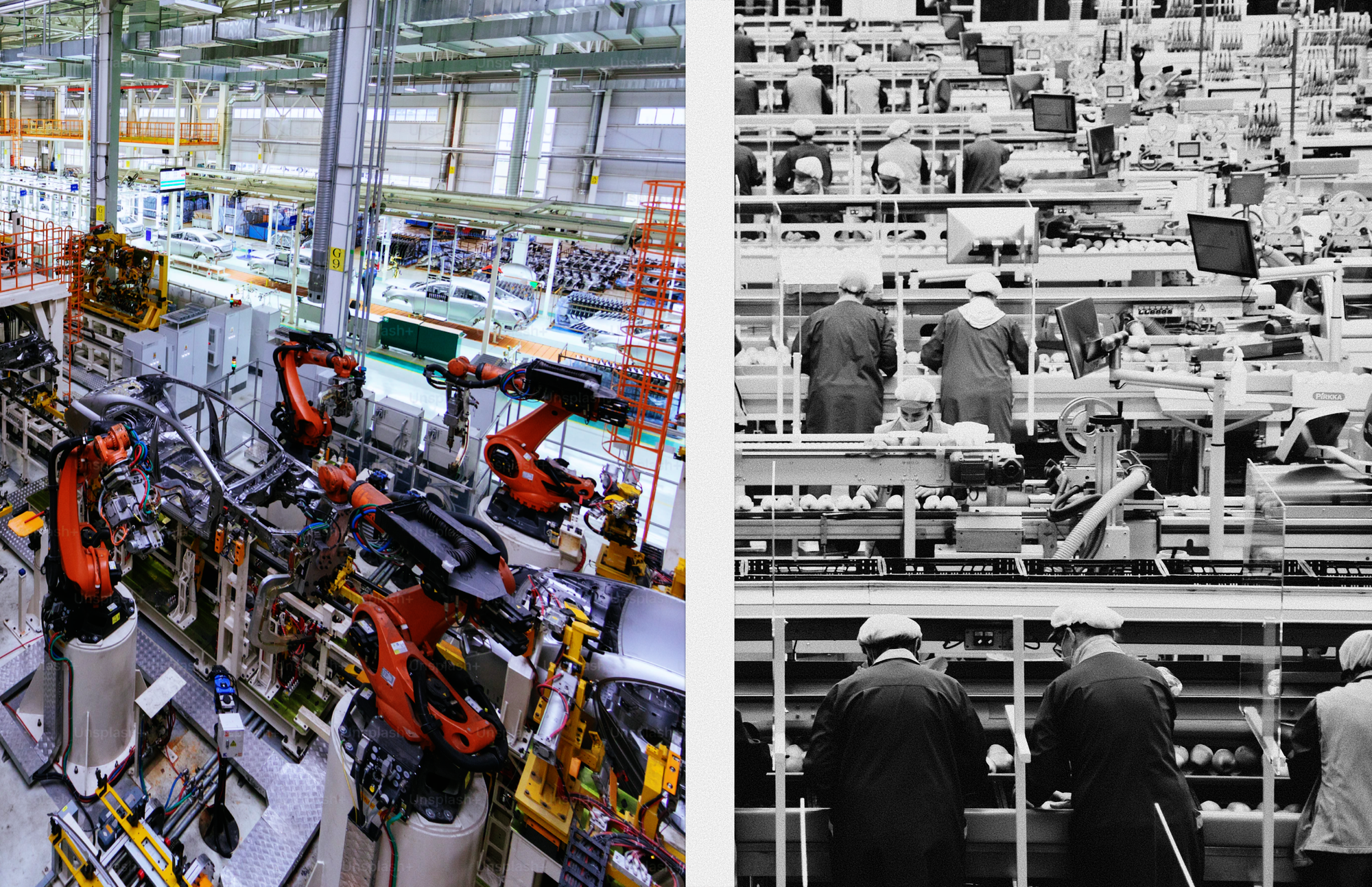

For example, let’s say you’re a product manufacturing branch of an MLM company that is using AI to improve production processes. By training your own AI model, you can ensure that your production data is kept private and confidential. This can prevent your competitors from gaining access to your production processes and improving their own processes at your expense.

3. Improved accuracy and performance

When you have your own trained AI model, you can fine-tune the model to improve its accuracy and performance.

Let’s say you’re using AI to personalize recommendations for your customers on your e-commerce website. By training your own AI model on your own dataset, you can fine-tune the model to improve its accuracy and performance. This can lead to better recommendations for your customers, increasing customer satisfaction and sales.
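To make "training on your own dataset" a bit more concrete, here is a minimal Python sketch. It is a deliberately tiny stand-in for a real AI model: it simply counts which products show up together in the same order and recommends the most frequent companions. The product names, the order history, and the function names `train_co_purchase_model` and `recommend` are all made up for illustration; a production recommender would be far more sophisticated.

```python
from collections import Counter, defaultdict
from itertools import permutations


def train_co_purchase_model(orders):
    """Count how often each pair of products appears in the same order."""
    co_counts = defaultdict(Counter)
    for order in orders:
        # set() ignores duplicate items within one order
        for a, b in permutations(set(order), 2):
            co_counts[a][b] += 1
    return co_counts


def recommend(model, product, top_n=2):
    """Return up to top_n products most often bought together with `product`."""
    companions = model.get(product, Counter())
    # Sort by count (highest first), then by name so ties are deterministic
    ranked = sorted(companions.items(), key=lambda kv: (-kv[1], kv[0]))
    return [name for name, _ in ranked[:top_n]]


# Hypothetical order history standing in for your company's private data
orders = [
    ["protein shake", "vitamin c", "shaker bottle"],
    ["protein shake", "shaker bottle"],
    ["vitamin c", "omega 3"],
    ["protein shake", "omega 3", "shaker bottle"],
]

model = train_co_purchase_model(orders)
print(recommend(model, "protein shake"))  # ['shaker bottle', 'omega 3']
```

The point is not the algorithm but where the data lives: the order history never leaves your own systems, and the recommendations reflect your customers rather than the internet at large.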

How to do it

Most non-technical companies can either develop their own expertise in AI over time, which is difficult in today's hiring market, or wait until a product is available for their industry.

Direct selling is in luck, though, as there is already an AI-powered e-commerce and mobile platform called Treel AI in the works. Treel AI is a Los Angeles-based tech company (owned by techery.io) that exclusively serves MLMs. Treel AI is scheduled to begin selling its solution in the summer of 2023.